Google’s AI Overviews Links Update: What Changed, Why CTR May Shift, and What Marketers Should Monitor

Google updated AI Overviews and AI Mode to make sources and outbound links more prominent, including clearer link indicators and a more visible way to access source lists. Because this changes how users interact inside the AI module, click behavior can shift even if traditional organic rankings stay the same. Marketers should audit which pages are being cited, what passages are being pulled, and whether those cited sections support the intended message and next step. Set up a pre/post monitoring loop for queries that trigger AI results, then improve on-page structure so the most cite-worthy parts of your content are the parts you want users to see.

More prominent citations inside AI answers can change where attention and clicks go.

Key takeaways

- Treat AI Overviews and AI Mode as distinct SERP modules with their own click behavior.

- Measure impact using a defined set of AI-triggering queries and compare pre/post performance.

- Improve snippet readiness with clear headings, scannable sections, and explicit definitions.

- Make landing pages work for high-intent, low-patience clicks with fast load, above-the-fold clarity, and obvious next steps.

What Google changed in AI Overviews and AI Mode link visibility

Google has updated how links and sources appear within AI Overviews and AI Mode, with the goal of making sources and outbound links more obvious inside the AI response itself. Instead of requiring extra effort to find where an answer came from, the interface now more clearly highlights that the response is supported by links to external pages.

As described in reporting on the update, the presentation includes clearer link indicators and a more visible way to access the sources behind the AI response, such as source lists that can be revealed via interaction (for example, hovering) and more recognizable link icons. The practical effect is that sources are easier to notice and potentially easier to click when users are scanning the AI output.

This matters because AI Overviews and AI Mode behave like their own SERP modules. Standard “blue link” results tend to distribute attention down the page in a familiar pattern, but an AI module concentrates attention inside a self-contained interface. When links inside that interface become more prominent, user behavior can shift within the module without any change to traditional rankings.

Why this matters: CTR mechanics can change even if rankings do not

CTR is not only a function of rank. It is also a function of interface design, attention cues, and how many “click opportunities” exist before a user reaches standard organic listings. When Google changes the visibility of sources and link affordances inside AI Overviews and AI Mode, it changes the incentives for where users click and how quickly they decide to click.

In an AI module, users may do one of three things: stop because the summary is sufficient, click a cited source because it feels trustworthy, or continue scrolling to other results. More prominent links can increase the chance of the second behavior, but the distribution of those clicks depends on whether your site is cited, where your citation appears, and how compelling the anchor context is within the AI response.

That creates potential winners and losers whose outcomes are not strictly tied to classic ranking improvements:

- Winners can be pages that are cited prominently and align well with the user’s next step, capturing high-intent clicks directly from the AI module.

- Losers can be pages that still rank well but receive fewer clicks because attention is absorbed by the AI module, or because competitors are cited more prominently inside it.

Teams that rely on predictable SERP click patterns should treat this as a cross-functional issue. SEO needs to know which queries trigger AI modules and which pages are cited. Content teams need to ensure the cite-worthy sections reflect the intended narrative. Analytics teams need to separate UI-driven changes from ranking-driven changes so decisions are based on the right diagnosis.

Audit: which of your pages are being cited and what section is getting pulled

Audit flow: query themes → cited pages → the exact passage Google attributes to you.

Start with an audit designed around query themes, not just a handful of head terms. The goal is to map where AI Overviews or AI Mode appear in your category and to understand whether you are present inside those modules.

- Identify query themes where AI Overviews or AI Mode show up. Build a short list across informational, comparison, and “how to” intents that are relevant to your product or content areas.

- Check for citation presence and record which URLs are referenced. Separate “homepage-level” citations from deep content citations because the optimization path is often different.

- Capture the cited context by noting what claim, definition, or step is being attributed to your page. This helps you see which passages Google is choosing to surface.
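
To keep this audit comparable from one round to the next, it can help to record every check in the same shape. The following is a minimal, hypothetical Python sketch. The `CitationRecord` fields, the `is_own_url`, `citation_level`, and `summarize` helpers, and the example queries and URLs are all illustrative assumptions. The rows themselves would come from manual SERP checks or whatever tracking tool you use. It treats a cited URL with an empty path as a homepage-level citation and any other path as deep content.

```python
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class CitationRecord:
    """One row of a hypothetical AI-module citation audit (field names are assumptions)."""
    query: str
    theme: str               # e.g. "informational", "comparison", "how to"
    ai_module: str           # "AI Overview", "AI Mode", or "none"
    cited_urls: list = field(default_factory=list)
    cited_passage: str = ""  # the claim, definition, or step attributed to your page


def is_own_url(url: str, domain: str) -> bool:
    """True if the URL's host is `domain` or one of its subdomains."""
    host = urlparse(url).hostname or ""
    return host == domain or host.endswith("." + domain)


def citation_level(url: str) -> str:
    """Treat an empty path as a homepage-level citation, anything else as deep content."""
    return "homepage" if urlparse(url).path.strip("/") == "" else "deep"


def summarize(records: list, domain: str) -> dict:
    """Count audited queries by whether, and at what level, `domain` is cited."""
    counts = {"no_module": 0, "not_cited": 0, "homepage": 0, "deep": 0}
    for rec in records:
        if rec.ai_module == "none":
            counts["no_module"] += 1
            continue
        ours = [u for u in rec.cited_urls if is_own_url(u, domain)]
        if not ours:
            counts["not_cited"] += 1
        elif any(citation_level(u) == "deep" for u in ours):
            counts["deep"] += 1  # a query with any deep citation counts as deep
        else:
            counts["homepage"] += 1
    return counts


# Hypothetical audit rows, for illustration only.
records = [
    CitationRecord("what is crawl budget", "informational", "AI Overview",
                   ["https://example.com/guides/crawl-budget"],
                   "Crawl budget is the number of URLs a search engine will crawl..."),
    CitationRecord("best seo audit tools", "comparison", "AI Mode",
                   ["https://example.com/", "https://competitor.example.org/tools"]),
    CitationRecord("how to fix soft 404s", "how to", "AI Overview",
                   ["https://competitor.example.org/soft-404"]),
    CitationRecord("seo audit pricing", "comparison", "none"),
]

print(summarize(records, "example.com"))
# {'no_module': 1, 'not_cited': 1, 'homepage': 1, 'deep': 1}
```

Keeping the cited passage on each row makes the next step, checking whether that passage matches your intended positioning, a review of one column rather than a fresh round of searches.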

Next, evaluate whether the cited passage matches your intended positioning. A citation can be “good” from a traffic perspective but still problematic if it highlights an outdated statement, an edge-case interpretation, or a phrasing that does not match how you want to be understood.

Finally, evaluate how clear the conversion path is after the click. A user who clicks from an AI module is often in a high-intent, low-patience mode: validating a point, looking for specifics, or seeking a next step. Confirm that the landing page, and especially the section tied to the cited content, makes the next action obvious, such as reading the next section, exploring a related resource, or taking a conversion-oriented step appropriate to the query intent.

Optimization checklist: make content easier to cite (and more useful after the click)

Optimizing for AI citations is largely about making it easy for systems to extract the right passage and for users to feel satisfied when they arrive. You are improving “snippet readiness” and “post-click usefulness” at the same time.

- Use clear headings for key questions and subtopics. Structure pages so that each major question has a dedicated section. If a page covers multiple ideas, make each idea easy to locate with descriptive headings.

- Make sections scannable. Keep paragraphs focused, and use lists where it improves clarity. If a user lands and can instantly see the relevant section, the click is less likely to bounce.

- Include explicit definitions for core concepts. If there is a term people commonly ask about, define it plainly and unambiguously in a dedicated section. Clear definitions are easier to summarize and cite than implied explanations.

- Reduce ambiguity in critical statements. Prefer precise language over vague phrasing when summarizing key points. The less interpretation required, the more likely the “right” meaning is carried into a citation.

Then optimize for the reality of AI-module traffic. These clicks can be fast and intent-heavy, so the landing experience should do more work immediately:

- Above-the-fold clarity. Make sure users can instantly tell they are in the right place and what they should do next.

- Speed hygiene. Keep pages performant so the value is delivered quickly after the click.

- Strong internal paths. Provide obvious routes to the next relevant resource or action, especially from informational pages that might be cited frequently.

If you want to apply this systematically, treat it like a content QA pass: identify the sections most likely to be cited, rewrite them for clarity, and confirm the page layout makes that section easy to find and act on.
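
A practical first step in that QA pass is finding which section of a page actually holds the passage an AI module attributes to you. The sketch below is hypothetical and does this with simple word overlap. It assumes you have already split the page into a heading-to-text mapping, and the scoring rule (the share of the passage's distinct words that appear in a section) is an illustrative heuristic. It is not how Google selects passages.

```python
import re


def tokens(text: str) -> set:
    """Lowercased word tokens; a deliberately simple normalization."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def locate_cited_section(sections: dict, passage: str) -> tuple:
    """Return (heading, score) for the section whose text best covers the passage.

    score is the share of the passage's distinct words that also appear in the
    section text, from 0.0 to 1.0. Returns (None, 0.0) if no section overlaps.
    """
    wanted = tokens(passage)
    if not wanted:
        raise ValueError("passage contains no words")
    best_heading, best_score = None, 0.0
    for heading, text in sections.items():
        score = len(wanted & tokens(text)) / len(wanted)
        if score > best_score:
            best_heading, best_score = heading, score
    return best_heading, best_score


# Hypothetical page, split into heading -> section text.
page = {
    "What is crawl budget?": "Crawl budget is the number of URLs a search engine "
                             "will crawl on a site in a given period.",
    "How to improve crawl efficiency": "Fix broken links, consolidate duplicate URLs, "
                                       "and keep sitemaps current.",
}
cited = "crawl budget is the number of URLs a search engine will crawl"

print(locate_cited_section(page, cited))  # ('What is crawl budget?', 1.0)
```

Once the section is located, the rewrite and layout checks from the checklist can be applied to that section first.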

Measurement playbook: monitor pre/post impact and separate UI shifts from ranking shifts

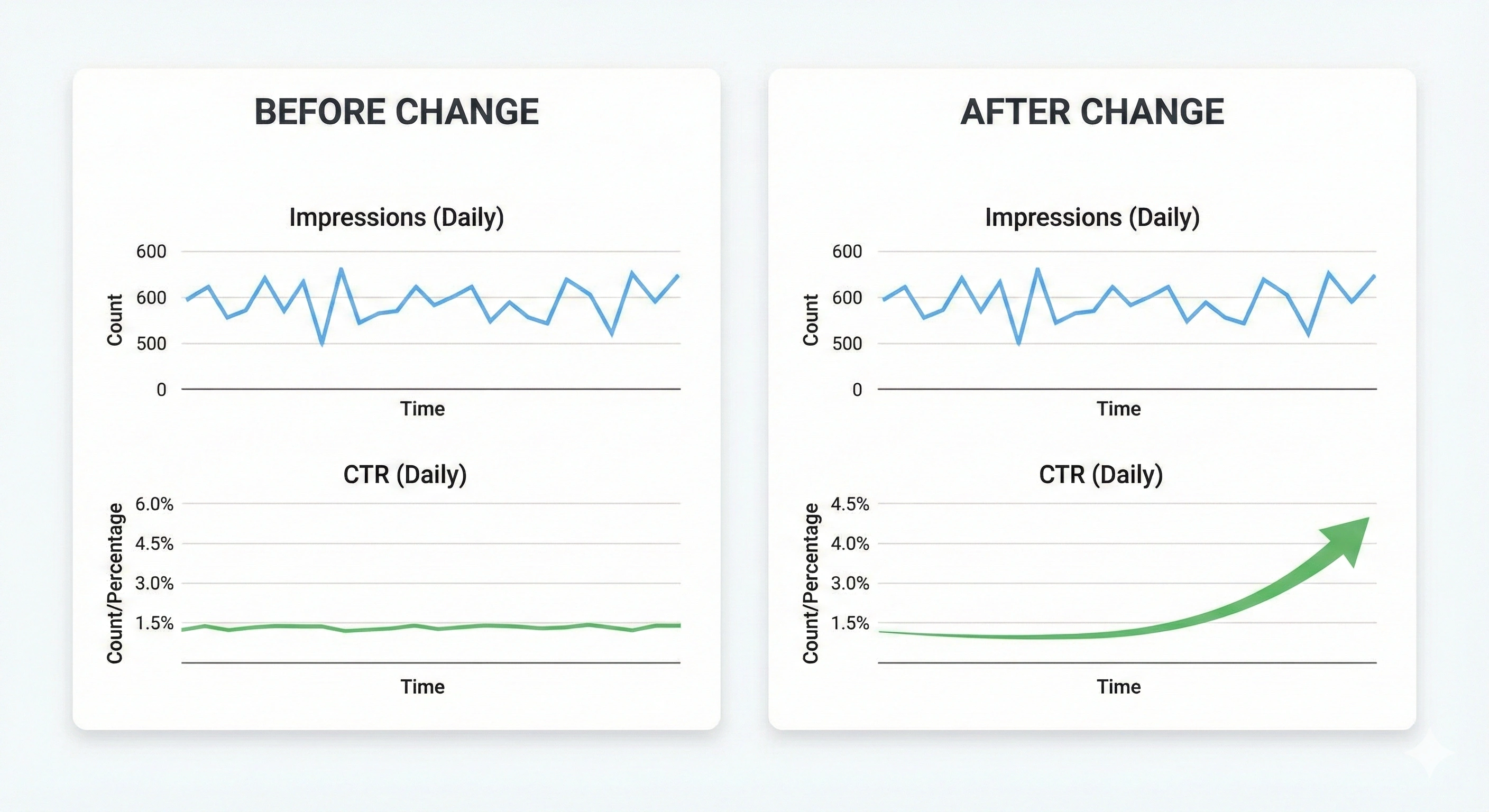

Compare pre vs. post: stable impressions with shifting CTR can signal an interface-driven change.

Because the update changes interface behavior, measurement needs to be designed to detect UI-driven shifts rather than assuming rankings are the only variable.

Build a stable query set where AI Overviews or AI Mode appear. Keep it consistent so you can compare trends over time. Include a mix of intents, and ensure the set reflects the queries that matter to your business rather than only the highest-volume terms.

For each query, monitor performance before and after the change using the metrics you rely on for search outcomes:

- Clicks and CTR to understand whether attention is moving toward or away from your listings or citations.

- Impressions to check whether demand or eligibility changed, so you can rule those out before attributing a click change to the interface. Avoid relying on impressions alone, because impressions can stay stable while click behavior changes.

- Downstream outcomes where possible, such as engagement signals or assisted conversions, to understand whether the clicks you do receive are more qualified or less aligned than before.
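
The click and CTR comparison can be computed per query and for the query set as a whole. This is a minimal sketch under stated assumptions: the `Period`, `compare_pre_post`, and `pooled_ctr` names are hypothetical, and the example numbers are invented. In practice the clicks and impressions per query might be exported from a tool such as Google Search Console's Performance report. Only queries present in both periods are compared, which keeps the query set fixed.

```python
from dataclasses import dataclass


@dataclass
class Period:
    """Clicks and impressions for one query over one date range."""
    clicks: int
    impressions: int

    @property
    def ctr(self) -> float:
        # CTR = clicks / impressions, treated as 0.0 when there were no impressions.
        return self.clicks / self.impressions if self.impressions else 0.0


def compare_pre_post(pre: dict, post: dict) -> dict:
    """Per-query deltas for queries present in both periods (a fixed query set)."""
    report = {}
    for query in sorted(pre.keys() & post.keys()):
        a, b = pre[query], post[query]
        report[query] = {
            "impressions_delta": b.impressions - a.impressions,
            "clicks_delta": b.clicks - a.clicks,
            "ctr_pre": a.ctr,
            "ctr_post": b.ctr,
            "ctr_delta_pts": (b.ctr - a.ctr) * 100,  # percentage points
        }
    return report


def pooled_ctr(periods: dict) -> float:
    """Total clicks divided by total impressions across the whole query set."""
    clicks = sum(p.clicks for p in periods.values())
    impressions = sum(p.impressions for p in periods.values())
    return clicks / impressions if impressions else 0.0


# Hypothetical numbers for two tracked queries, before and after the update.
pre = {
    "what is crawl budget": Period(clicks=120, impressions=4000),
    "crawl budget vs crawl rate": Period(clicks=30, impressions=1000),
}
post = {
    "what is crawl budget": Period(clicks=80, impressions=4000),
    "crawl budget vs crawl rate": Period(clicks=30, impressions=1000),
}

for query, row in compare_pre_post(pre, post).items():
    print(query, row["impressions_delta"], row["clicks_delta"], round(row["ctr_delta_pts"], 2))
print(round(pooled_ctr(pre), 4), round(pooled_ctr(post), 4))
```

In this invented example, "what is crawl budget" keeps the same impressions while CTR falls by one percentage point, which is the stable-impressions, shifting-CTR pattern worth investigating.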

Interpretation works best as a joint exercise between SEO, content, and analytics. If rankings are stable but CTR shifts, that suggests a UI-driven change or a change in how the AI module is attracting attention. If both rankings and CTR move, you may have overlapping causes. Either way, the action plan should connect measurement to page-level adjustments: update the sections being cited, strengthen above-the-fold clarity, and confirm the click leads naturally to the next step.
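
The interpretation rule described above can be written as a small decision function so that it is applied the same way across queries. This is a hypothetical sketch. `diagnose` and its default tolerances (1 position, 0.5 CTR points) are assumptions to tune against your data's normal noise. It assumes average position where a lower number means a higher ranking, as in Google Search Console. A threshold check like this only flags where to look; it does not prove the cause.

```python
def diagnose(position_change: float, ctr_change_pts: float,
             position_tolerance: float = 1.0, ctr_tolerance_pts: float = 0.5) -> str:
    """Rough pre/post read for one query or query group.

    position_change: post minus pre average position (positive = ranking lower).
    ctr_change_pts: post minus pre CTR, in percentage points.
    The default tolerances are illustrative assumptions, not recommended values.
    """
    ranking_moved = abs(position_change) > position_tolerance
    ctr_moved = abs(ctr_change_pts) > ctr_tolerance_pts
    if ctr_moved and not ranking_moved:
        return "likely UI-driven"
    if ctr_moved and ranking_moved:
        return "overlapping causes"
    if ranking_moved:
        return "ranking shift without CTR change"
    return "no material change"


# Hypothetical input: position essentially stable, CTR down 1 point.
print(diagnose(position_change=0.2, ctr_change_pts=-1.0))  # likely UI-driven
```

Queries flagged as "likely UI-driven" are the natural starting point for re-checking AI module presence and which of your passages are being cited.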

Frequently asked questions

What changed in Google AI Overviews links and sources?

Google made sources and outbound links more prominent in AI Overviews and AI Mode, including clearer link indicators and a more visible way to access source lists within the AI experience. The change affects how users notice and interact with citations inside the AI module.

Will Google’s AI Overviews links update affect SEO traffic and CTR?

It can. Because the update changes how links are presented inside AI modules, clicks can shift even when traditional organic rankings do not change. The impact depends on whether your pages are cited and how those citations appear within the AI response.

How do I measure the impact of AI Overviews or AI Mode on clicks?

Create a stable set of queries that trigger AI Overviews or AI Mode and track clicks and CTR over time, comparing pre- and post-change periods. Use impressions as context but do not rely on them alone, and add downstream outcomes where possible to understand traffic quality.

How can I optimize my pages to be cited in AI Overviews?

Improve snippet readiness by using clear headings, scannable sections, and explicit definitions for core concepts. Make key statements unambiguous, and ensure the landing page delivers fast, above-the-fold clarity with obvious next steps so a citation-driven click has a strong post-click experience.
