Face Recognition in Smart Glasses: A Marketer’s Readiness Guide for Consent, Brand Safety, and Measurement


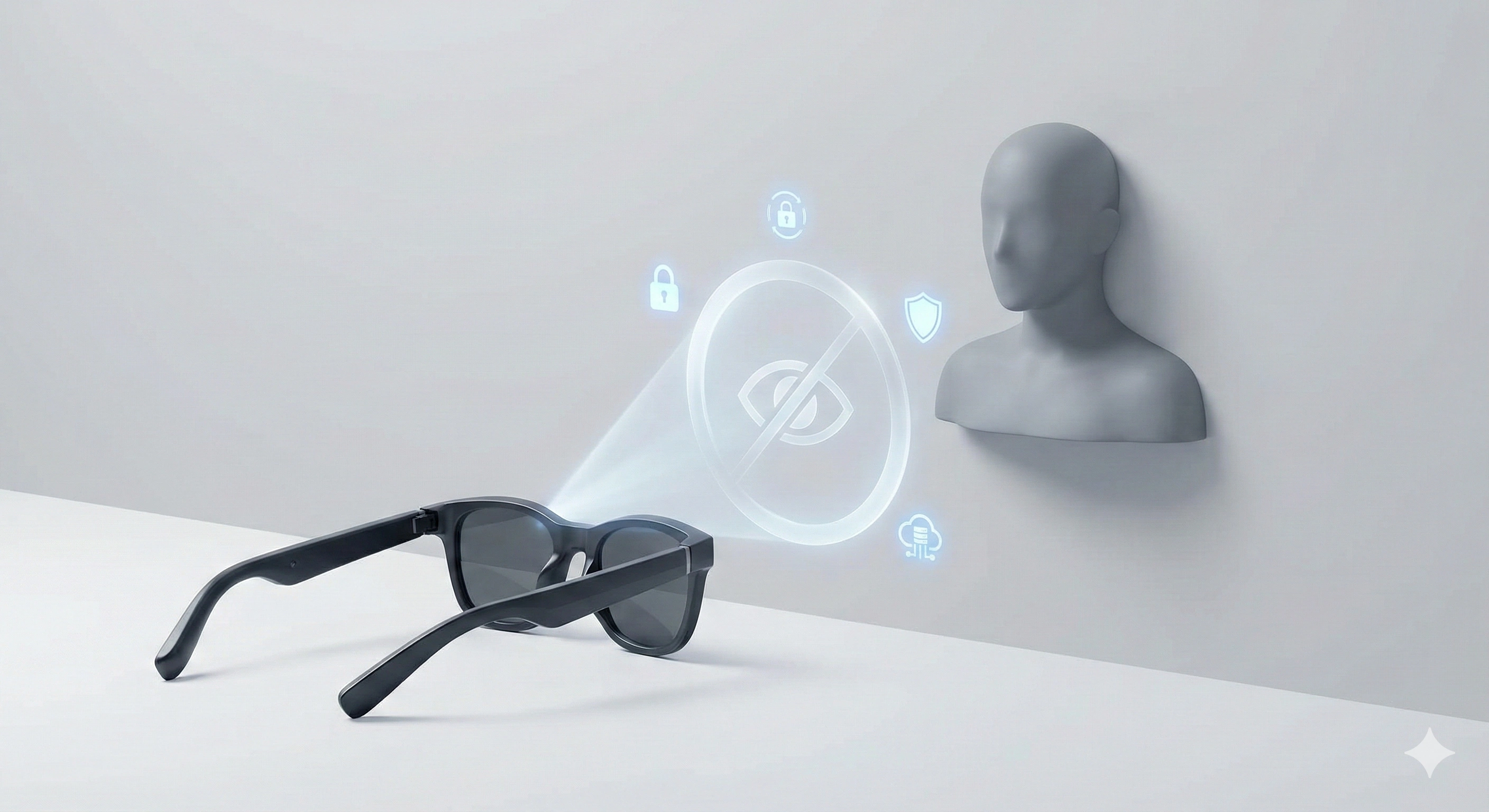

Meta is reportedly exploring face recognition capabilities for smart glasses, including a reported “Name Tag” concept that could enable identification in everyday settings. For marketers, the risk profile shifts from mostly online tracking and inference to in-the-moment recognition, which can provoke stronger reactions around consent and social norms. Readiness means tightening governance for consent and disclosure, expanding brand safety to cover biometric-adjacent contexts, and minimizing any biometric-related data use in marketing workflows. It also means treating any future “identity/recognition” targeting or measurement as a high-risk claim that requires clear methodology, documented limitations, and validation before it influences spend or strategy.

A readiness view: consent first, then brand safety and measurement validation.

Key takeaways

- Assume biometric-adjacent experiences can create brand risk without traditional ad placements.

- Default to consent and disclosure principles, and minimize any biometric-related data use in marketing workflows.

- Do not adopt “recognition/identity” measurement or targeting claims without demanding methodology and limitations.

- Add a creative QA step to pretest perceived intrusiveness and trust impact before scaling messaging near identity or recognition.

What’s reportedly coming to smart glasses and why it’s different

A report described Meta exploring face recognition for smart glasses, which would bring the possibility of identifying people in everyday settings closer to mainstream consumer wearables. From a marketing readiness perspective, the key issue is not whether a specific feature is available today, but how quickly the surrounding ecosystem could normalize “recognition” as a consumer interaction pattern.

The same reporting referenced a “Name Tag” concept. Treat that as a reported idea rather than a confirmed launch, product spec, or availability timeline. For marketers, the important takeaway is that “name-level” identification would be qualitatively different from personalization based on typical digital identifiers.

Principle-level distinction: ambient identification changes the context of consent. Digital identifiers often operate within screens, sessions, and apps. Smart-glasses recognition, by contrast, could be experienced as happening in the flow of real life, potentially outside a user’s immediate expectation that an identification event is occurring.

- Digital identifiers: tend to be mediated through logged-in experiences, cookies, device IDs, or platform-level signals.

- Ambient identification: can be perceived as “about a person” in a physical environment, not just “about a device” in an app.

Why this changes marketing risk: from data privacy to real-world identification

From contained, screen-based identifiers to ambient identification in shared spaces.

When identification moves into physical spaces, the potential for perceived creepiness increases because the interaction feels more personal and immediate. Even if a marketer is not directly using face recognition, the broader environment can shift consumer expectations about surveillance, autonomy, and whether they can reasonably avoid being identified.

This also changes how trust backlash can form. With online tracking, brand concerns often arise after the fact, when someone notices ads that feel too specific. With in-the-moment recognition, the discomfort may happen instantly and publicly, which can compress the timeline for negative sentiment.

Brand exposure can occur without an intentional campaign because risk can come from context adjacency. Principle-level examples include:

- Being associated with an environment where recognition is occurring, even if the brand is only present through sponsorships, partnerships, retail presence, or influencer content.

- Messaging that references personalization, identity, or “knowing you” landing poorly when audiences are already sensitive to recognition technologies.

- Customer support or community channels receiving complaints about “tracking” that consumers loosely attribute to brands they interact with, regardless of technical responsibility.

Ethical considerations marketers can document and socialize internally include:

- Autonomy: people should feel they can move through spaces without unexpected identification.

- Consent expectations: what feels acceptable in an app may not feel acceptable in a physical setting.

- Social norms: even when something is technically possible, it may violate norms about anonymity in everyday life.

Separately, broader reporting about AI backlash underscores that consumer trust can be fragile when technology feels opaque or imposed. That makes clear communication and restraint more valuable, not less.

Governance checklist: consent, disclosure, and biometric-adjacent brand safety


If face recognition enters consumer wearables, governance should start with consent and disclosure principles, documented in a way that teams can consistently enforce. The goal is to prevent “accidental” use cases where creative, media, CRM, or measurement teams drift into identity-adjacent claims without realizing the risk.

What to document and enforce (principle-level checklist):

- Clear definitions: define what your organization considers “biometric-adjacent” marketing, including any identity or recognition language in targeting, personalization, or measurement.

- Disclosure requirements: require plain-language explanations anytime an experience could reasonably be interpreted as identity-based personalization.

- Consent thresholds: set higher internal bars for opt-in and user control where identity or recognition is implied, even if a vendor frames it as “probabilistic” or “anonymous.”

- Review ownership: assign who signs off across legal, privacy, brand, comms, and analytics before any identity-adjacent activation goes live.

Minimize and compartmentalize any biometric-related data use in marketing processes. Even without making claims about specific regulations, a practical approach is to limit collection, limit access, and limit retention. Operationally, that can look like keeping sensitive signals out of general marketing tooling, restricting who can query or export any identity-adjacent datasets, and maintaining auditable decision logs for exceptions.

Brand safety definitions should expand beyond traditional content adjacency to include experiential contexts where people may be identified. That means updating suitability frameworks to consider whether an environment or partner experience could reasonably be perceived as enabling recognition of individuals, and whether your brand presence could be interpreted as endorsing it.

- Context red flags: “recognize people,” “identify anyone,” “name-level personalization,” or similar language in partner materials.

- Creative red flags: copy that implies knowing who someone is in a physical setting, or that blurs the line between personalization and identification.

- Placement red flags: activations that happen near entrances, queues, or real-world moments where audiences are sensitive to being observed.
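As a minimal sketch, the context and creative red flags above could be turned into a first-pass text screen for partner briefs and draft copy. The phrase list, the `flag_red_flags` function, and the creative phrases in particular are hypothetical examples, not a standard tool or vetted taxonomy. A keyword screen will miss paraphrases, so any hit should only route the item to the review owners named in the governance checklist, never act as an automatic verdict.

```python
import re

# Hypothetical starter list: "context" phrases come from the red flags above;
# "creative" phrases are illustrative assumptions. Extend per brand and market.
RED_FLAG_PHRASES = {
    "context": ["recognize people", "identify anyone", "name-level personalization"],
    "creative": ["we know who you are", "knows who you are", "recognizes you"],
}


def flag_red_flags(text: str) -> dict[str, list[str]]:
    """Return red-flag phrases found in `text`, grouped by category.

    Matching is case-insensitive and collapses runs of whitespace, so
    "Recognize   People" still matches "recognize people".
    """
    normalized = re.sub(r"\s+", " ", text.lower())
    hits: dict[str, list[str]] = {}
    for category, phrases in RED_FLAG_PHRASES.items():
        found = [phrase for phrase in phrases if phrase in normalized]
        if found:
            hits[category] = found
    return hits


if __name__ == "__main__":
    brief = "Our glasses let you Recognize  People and unlock name-level personalization."
    # Any non-empty result means: send to legal/privacy/brand review before approval.
    print(flag_red_flags(brief))
```

Keeping the phrases in a plain dictionary lets brand, legal, and privacy reviewers edit the list without touching the matching logic.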

Measurement and targeting guardrails: validate any “identity/recognition” claims

Treat identity-linked metrics as claims that must pass documented checks before use.

Any “identity/recognition” targeting or measurement should be treated as a high-risk measurement claim. That means you do not accept it as a black-box KPI or narrative. You require methodology before it influences planning, budgeting, optimization, or reporting.

Minimum methodology questions to require before use:

- What is being matched, at what level (device, account, household, individual), and what does the vendor explicitly not claim?

- What are the data sources, and what assumptions connect them?

- How is accuracy evaluated, and what is the known error profile?

- What is the time window for matching, and how are edge cases handled?

Require limitations and validation steps before accepting “recognition” performance narratives. At a practical level, that can mean insisting that any report includes clear caveats, defining where measurement is directional rather than exact, and running independent sanity checks.

- Limitations in writing: what the metric can and cannot prove.

- Validation plan: what internal checks you will run before scaling spend or adopting the metric as a primary success measure.

- Decision thresholds: what level of uncertainty triggers “do not use for optimization.”
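One way to make these guardrails enforceable is to record each vendor claim as a structured intake and refuse to use it for optimization until the required answers exist. The sketch below assumes a hypothetical `IdentityClaim` record whose required fields mirror the methodology questions and written artifacts listed above, plus an illustrative uncertainty threshold expressed as a relative error bound. The 0.10 value is a placeholder each team would set for itself, not a recommended number.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical placeholder threshold: a reported relative error above this
# bound marks the metric "directional only" (do not use for optimization).
MAX_RELATIVE_ERROR = 0.10

# Fields mirroring the methodology questions and written artifacts above.
REQUIRED_FIELDS = (
    "match_level", "not_claimed", "data_sources", "accuracy_method",
    "matching_window", "limitations", "validation_plan",
)


@dataclass
class IdentityClaim:
    """Hypothetical intake record for a vendor identity/recognition metric."""
    name: str
    match_level: str = ""      # device, account, household, or individual
    not_claimed: str = ""      # what the vendor explicitly does not claim
    data_sources: str = ""     # sources and the assumptions connecting them
    accuracy_method: str = ""  # how accuracy was evaluated; known error profile
    matching_window: str = ""  # time window and edge-case handling
    limitations: str = ""      # what the metric can and cannot prove
    validation_plan: str = ""  # internal checks before scaling spend
    relative_error: Optional[float] = None  # vendor-reported error bound, if any


def review_claim(claim: IdentityClaim) -> tuple[str, list[str]]:
    """Return (decision, reasons): 'blocked', 'directional_only', or 'eligible'."""
    missing = [f for f in REQUIRED_FIELDS if not getattr(claim, f).strip()]
    if missing:
        # Undocumented claims never reach planning, budgeting, or reporting.
        return "blocked", [f"missing: {f}" for f in missing]
    if claim.relative_error is None:
        return "directional_only", ["no quantified error bound"]
    if claim.relative_error > MAX_RELATIVE_ERROR:
        return "directional_only", [f"error {claim.relative_error:.0%} exceeds threshold"]
    # "Eligible" only means the checks passed; the validation plan still runs.
    return "eligible", []


if __name__ == "__main__":
    print(review_claim(IdentityClaim(name="CTV household reach", match_level="household")))
```

Keeping the threshold as a named constant makes the “do not use for optimization” rule explicit and reviewable rather than a case-by-case judgment.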

Principle-level connection to adjacent channels: skepticism about identity matching accuracy has been reported in connected TV contexts, which is a useful reminder that “match rates” and “identity graphs” can be overstated. Even when a platform presents confident identity-linked numbers, marketers should treat them as claims that require scrutiny, not as ground truth.

Practical readiness: creative QA, pretesting, and response planning

Readiness is not only a legal and measurement exercise; it is also a creative one. Add a creative QA step focused on perceived intrusiveness and consent clarity before scaling any messaging that could be interpreted as identity-based, recognition-based, or “always-on” personalization.

A simple pretesting approach (principle-level) can include:

- Message variants: test direct personalization language versus neutral language to see which drives trust without triggering discomfort.

- Disclosure language: test how audiences respond to plain explanations of what is and is not happening.

- Trust impact signals: track qualitative feedback like “creepy,” “surveillance,” “too personal,” or “how do they know that?” alongside standard ad metrics.
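A minimal sketch of the trust-signal tracking described above: tally, per message variant, the share of open-ended pretest responses that mention discomfort terms, so the rate can be read next to standard ad metrics. The term list is taken from the examples above; the `trust_concern_rate` function, the input shape of (variant, response text) pairs, and the sample responses are assumptions invented for illustration, not real results. Substring matching is crude and is meant to triage responses for human reading, not replace it.

```python
from collections import defaultdict

# Discomfort terms taken from the examples above; matching is case-insensitive.
TRUST_CONCERN_TERMS = ("creepy", "surveillance", "too personal", "how do they know")


def trust_concern_rate(responses: list[tuple[str, str]]) -> dict[str, float]:
    """Map each variant to the share of its responses mentioning a concern term.

    `responses` is a list of (variant_label, free_text_response) pairs.
    """
    totals: dict[str, int] = defaultdict(int)
    flagged: dict[str, int] = defaultdict(int)
    for variant, text in responses:
        totals[variant] += 1
        lowered = text.lower()
        if any(term in lowered for term in TRUST_CONCERN_TERMS):
            flagged[variant] += 1
    # Variants with no flagged responses get 0.0 via the defaultdict.
    return {variant: flagged[variant] / totals[variant] for variant in totals}


if __name__ == "__main__":
    sample = [  # invented responses, for illustration only
        ("personalized", "Kind of creepy, how do they know that?"),
        ("personalized", "Useful reminder."),
        ("neutral", "Clear and simple."),
        ("neutral", "Fine."),
    ]
    print(trust_concern_rate(sample))
```

Comparing rates across variants, rather than reading raw comment counts, keeps the direct-versus-neutral comparison fair when variants receive different numbers of responses.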

Operational readiness matters because sentiment can shift quickly when technology stories break. Build a response plan that covers approvals, opt-out pathways, and escalation to communications and customer support if questions or complaints increase.

- Approvals: define who can pause identity-adjacent creative or placements quickly.

- Opt-out pathways: ensure customers can find and use preference controls without friction, and that support teams can explain them consistently.

- Escalation: prepare internal FAQs and routing for privacy and trust concerns so frontline teams are not improvising.


Frequently asked questions

What is the reported “Name Tag” feature for smart glasses and what could it imply for marketers?

Reporting described a “Name Tag” concept in the context of Meta exploring face recognition for smart glasses. Marketers should treat it as a reported idea rather than a confirmed product feature. The implication is that name-level identification in everyday settings would raise higher expectations for consent, clearer disclosure, and tighter brand safety and measurement standards than typical digital personalization.

How should marketers update consent and disclosure practices if face recognition enters consumer wearables?

Update governance so consent and disclosure are the default for any experience that could be interpreted as identity-based. Document what “biometric-adjacent” means internally, require plain-language disclosure when identity or recognition is implied, and set higher internal review and approval thresholds before launching related creative, partnerships, or measurement.

What brand safety rules should apply to biometric-adjacent contexts like face recognition?

Expand brand safety beyond content adjacency to include experiential contexts where people may be identified. Add suitability rules that flag identity or recognition language in partner materials, avoid placements or activations that could be perceived as endorsing real-world identification, and include creative QA to screen for copy that implies “knowing who someone is” in physical settings.

How do you validate platform claims about identity or recognition measurement before using them?

Treat identity or recognition metrics as measurement claims that require documentation. Ask for methodology, data sources, accuracy evaluation, and explicit limitations, then run internal validation and sanity checks before using the numbers for optimization or performance narratives. Keep the limitations in reporting, and be wary of overstated “identity match” confidence, consistent with broader skepticism about identity matching accuracy in adjacent channels like CTV.
