Gemini Checkout + UCP: Privacy, Consent, and Measurement When Shopping Moves Into AI Assistants

AI-assisted shopping and built-in checkout in assistants create a new conversion surface where user inputs, intent signals, and transaction details may move across more parties and interfaces than in a traditional site checkout. That intensifies privacy, consent, and disclosure questions because users may not clearly see who is collecting what data, for what purpose, and where the purchase is actually being completed. Marketing teams should map data flows across the assistant and retailer stack, align consent and disclosure to this new surface, and update governance so offers and recommendations do not produce misleading outcomes. For measurement, teams should redefine what counts as an assist versus a conversion and validate reporting assumptions end to end before treating assistant-mediated purchases as comparable to standard ecommerce conversions.

When shopping and checkout move into an assistant, data flows, consent expectations, and measurement boundaries shift.

Key takeaways

- Treat AI-assistant checkout as a distinct conversion surface with distinct privacy and disclosure risks.

- Build a simple, shared data map of inputs, intent signals, and transaction details across the assistant and commerce stack.

- Update governance for product claims, pricing, availability, and promo terms so AI outputs do not create trust or compliance issues.

- Redefine session, assist, and conversion for assistant-mediated discovery and checkout, and add validation steps before trusting the numbers.

What is “agentic checkout” and what changed with Gemini shopping

“Agentic checkout” is a useful shorthand for a shopping journey where an AI assistant does more than answer questions. It can function as both a discovery layer (helping a user find and compare products) and a transaction layer (helping complete a purchase) within the same interface.

The shift to built-in checkout is more than a UI change. It creates a new conversion surface with its own data flows, consent expectations, and measurement mechanics. Instead of the conversion being anchored to a merchant site session, key steps may occur inside the assistant experience, which changes what marketers can observe and what users may understand about the transaction context.

You will often see this discussion alongside the Universal Commerce Protocol (UCP). At a high level, UCP is discussed as an attempt to standardize or streamline how commerce systems communicate so assistant-mediated shopping can work across more retailers and platforms. Because public descriptions can vary and implementations can evolve, treat UCP as a framing concept for interoperability rather than assuming specific technical behaviors without confirmation.

Why privacy and consent questions intensify when checkout happens inside an assistant

Map what data moves where, and place consent and disclosure at the handoff moments users may not notice.

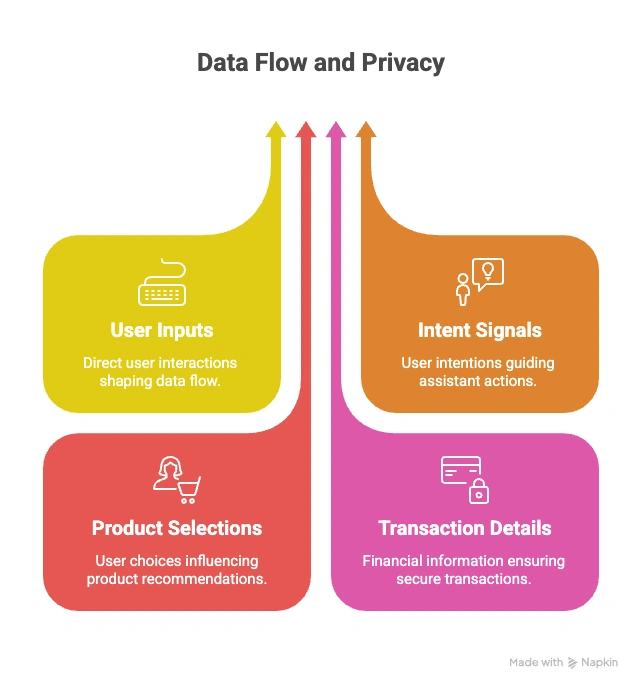

Assistant-mediated purchase flows can involve multiple data types that are privacy-relevant, including:

- User inputs such as prompts, preferences, constraints, and questions that may reveal sensitive intent.

- Intent signals inferred from conversation context, comparisons, or shortlists.

- Product selections including brand, model, size, color, and quantity choices.

- Transaction details such as shipping information, payment-related steps, and order confirmation artifacts.

Consent and disclosure questions can arise because the assistant and the retailer stack may each play a role in collecting, processing, or storing information. Users may experience the flow as “one conversation,” while data handling and responsibility may be distributed across systems. That mismatch can make it harder for users to understand what they are consenting to, and for organizations to ensure disclosures are clear at the moment the user is actually making a purchase decision.

User expectations in a chat interface can also differ from a traditional checkout. In a web checkout, the merchant identity, the cart, and the terms are typically explicit. In a conversational flow, the user may not always distinguish between assistant-generated suggestions, sponsored placements, retailer-provided product data, or the final merchant of record. Teams should assume expectations will be shaped by what the interface makes visible: who the user believes they are “talking to,” where the purchase is happening, and how clearly price, terms, and data use are presented.

Practical guidance that does not depend on any single platform implementation:

- Minimize surprise. Align data collection and use with what a reasonable user would expect from the step they are on (browsing vs. paying vs. post-purchase support).

- Use layered disclosure. Provide short, plain-language disclosures at key moments, with a path to fuller details when needed.

- Separate convenience from consent. An easier checkout should not blur whether the user has agreed to specific data uses beyond completing the transaction.

Governance for offers, pricing, and recommendations: reducing “manipulative” outcomes

When an assistant can recommend products and potentially streamline checkout, governance has to cover not only what is true in the product catalog, but also how that information is presented in conversational outputs. Small mismatches can create outsized trust issues because users may rely on the assistant’s phrasing rather than verifying details themselves.

Start by standardizing the inputs that drive AI outputs. Ensure you have a controlled source of truth for:

- Product claims that are allowed to be stated as facts (and which require qualification).

- Price and how it is computed (base price vs. discounts, taxes, shipping, membership pricing).

- Availability (in stock, backorder, delivery dates) and how quickly it updates.

- Promo terms such as eligibility, expiration, and exclusions.
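One way to make a controlled source of truth concrete is a single structured record per offer, from which every channel derives its price wording. The sketch below is illustrative only: `OfferRecord`, its fields, and `price_breakdown` are hypothetical names chosen for this example, not part of any assistant platform or UCP schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from decimal import Decimal


@dataclass(frozen=True)
class OfferRecord:
    """Hypothetical source-of-truth record for one offer."""
    sku: str
    base_price: Decimal
    discount: Decimal
    tax: Decimal
    shipping: Decimal
    in_stock: bool
    availability_updated_at: datetime

    def total(self) -> Decimal:
        # What the user will actually pay, computed in exactly one place.
        return self.base_price - self.discount + self.tax + self.shipping

    def availability_is_fresh(self, now: datetime, max_age: timedelta) -> bool:
        # Availability older than max_age should not be stated as fact.
        return now - self.availability_updated_at <= max_age


def price_breakdown(offer: OfferRecord) -> str:
    """Render the same price wording for every channel."""
    return (
        f"{offer.base_price} base, -{offer.discount} discount, "
        f"+{offer.tax} tax, +{offer.shipping} shipping = {offer.total()} total"
    )
```

Using `Decimal` rather than floats avoids rounding drift between what the catalog stores and what a conversational output states.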

Next, define internal rules for acceptable persuasion. Even without making platform-specific claims, marketers can set clear guardrails for how urgency and framing should work in assistant outputs:

- Urgency and scarcity language should be tied to verifiable conditions (for example, a real promotion end time or inventory state), and avoid overstating certainty.

- Offer presentation should clearly communicate what is included, what conditions apply, and what the user will pay, using consistent language across channels.

- Recommendation rationale should avoid implying an endorsement that cannot be supported. Keep phrasing aligned to available attributes and user-stated preferences.
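The urgency guardrail above can be partially automated. The following is a minimal sketch, assuming you can capture assistant output text and look up the promotion end time and inventory for the product it mentions. The phrase patterns, thresholds, and the function name `unsupported_urgency` are assumptions for illustration, and a real phrase list would need to be much broader.

```python
import re
from datetime import datetime, timedelta
from typing import List, Optional

# Illustrative phrase patterns only; not an exhaustive list.
SCARCITY = re.compile(r"\bonly \d+ left\b|\balmost gone\b", re.IGNORECASE)
DEADLINE = re.compile(r"\bends (?:today|tonight|soon)\b|\blast chance\b", re.IGNORECASE)


def unsupported_urgency(
    text: str,
    promo_ends_at: Optional[datetime],
    units_in_stock: int,
    now: datetime,
    low_stock_threshold: int = 5,
    deadline_window: timedelta = timedelta(hours=24),
) -> List[str]:
    """Return urgency claims in `text` that the source data does not support."""
    issues = []
    # Scarcity wording is only acceptable when inventory is actually low.
    if SCARCITY.search(text) and units_in_stock > low_stock_threshold:
        issues.append("scarcity claim without low inventory")
    # Deadline wording needs a real promotion that ends within the window.
    if DEADLINE.search(text):
        ends_soon = (
            promo_ends_at is not None
            and now <= promo_ends_at <= now + deadline_window
        )
        if not ends_soon:
            issues.append("deadline claim without a promotion ending soon")
    return issues
```

A check like this only catches known phrasings, so it complements prompt-based review rather than replacing it.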

Finally, build a review and escalation path. Because assistant outputs can vary by prompt, create a lightweight process to detect and respond to misleading or mismatched outputs:

- Monitoring via prompt testing for high-volume categories, top offers, and sensitive claim areas.

- Escalation to the correct owner (merchandising, legal, privacy, customer support) based on the issue type.

- Correction by updating the underlying source data and documenting what changed, so the fix is durable and auditable.

Measurement readiness: redefining sessions, assists, and conversions in AI commerce

Define session, assist, and conversion, then verify event lineage and deduplication across systems.

Assistant-mediated shopping disrupts traditional measurement assumptions. If discovery, evaluation, and checkout occur across an assistant and retailer stack, the familiar boundaries of a “session” or “conversion” can blur. Teams should align early on definitions that are usable for analysis and consistent across stakeholders.

Agree on definitions that reflect an assistant-mediated journey:

- Session: what starts and ends a measurable shopping interaction in the assistant context (time-based, intent-based, or checkout-based boundaries).

- Assist: what counts as meaningful influence by the assistant, such as product shortlisting, comparison, or a handoff into a purchase step.

- Conversion: what constitutes a completed purchase, and whether it is defined by an order confirmation event, a retailer receipt, or another verifiable completion signal.
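To show how these definitions become testable rules, here is a minimal sketch that uses a time-based session boundary. The event names, the 30-minute inactivity gap, and `summarize_sessions` are assumptions made for this example; your own definitions may be intent-based or checkout-based instead.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple

# Hypothetical event names; substitute whatever your systems actually emit.
ASSIST_EVENTS = {"shortlist", "comparison", "checkout_handoff"}
CONVERSION_EVENTS = {"order_confirmed"}


def summarize_sessions(
    events: List[Tuple[datetime, str]],
    gap: timedelta = timedelta(minutes=30),
) -> List[Dict[str, int]]:
    """Split one user's events into sessions and count assists and conversions.

    A new session starts whenever the time since the previous event exceeds `gap`.
    """
    sessions: List[Dict[str, int]] = []
    last_ts = None
    for ts, name in sorted(events):
        if last_ts is None or ts - last_ts > gap:
            sessions.append({"assists": 0, "conversions": 0})
        current = sessions[-1]
        if name in ASSIST_EVENTS:
            current["assists"] += 1
        elif name in CONVERSION_EVENTS:
            current["conversions"] += 1
        last_ts = ts
    return sessions
```

Writing the definitions as code forces stakeholders to agree on edge cases, such as whether a purchase two hours after a shortlist belongs to the same session.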

Then validate reporting assumptions end to end. The goal is to know exactly what is counted, where, and by whom, before you build strategy on top of the numbers. Borrow a measurement mindset used in other complex marketplaces: insist on clarity about mechanics rather than relying on black-box reporting.

- Event lineage checks: trace a single purchase from user action to recorded conversion, confirming which system generated each event.

- Deduplication rules: confirm whether the same transaction can be counted more than once across assistant, retailer analytics, and ad tech reporting.

- Attribution boundaries: document what you can validly attribute versus what is directional due to missing visibility across surfaces.
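The lineage and deduplication checks can be sketched as a small reconciliation step. This example assumes every system records a shared order identifier with each purchase event, which you would need to confirm for your own stack. The field names `order_id`, `source`, and `type`, and the function name, are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def deduplicate_conversions(events: Iterable[dict]) -> Dict[str, List[str]]:
    """Map each order to the systems that reported it.

    Each order counts as one conversion no matter how many systems saw it;
    the list of sources is the lineage for that order.
    """
    sources_by_order = defaultdict(set)
    for event in events:
        if event["type"] == "purchase":
            sources_by_order[event["order_id"]].add(event["source"])
    return {order: sorted(sources) for order, sources in sources_by_order.items()}


events = [
    {"type": "purchase", "order_id": "A1", "source": "assistant"},
    {"type": "purchase", "order_id": "A1", "source": "retailer_analytics"},
    {"type": "purchase", "order_id": "B2", "source": "retailer_analytics"},
    {"type": "view", "order_id": "", "source": "assistant"},
]
conversions = deduplicate_conversions(events)
naive_count = sum(1 for e in events if e["type"] == "purchase")
```

Here `naive_count` is 3 while `len(conversions)` is 2, and the difference is the double counting to explain. Orders reported by only one system, like `B2`, mark the attribution boundaries worth documenting.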

Document limitations and gaps for stakeholders. If your reporting cannot distinguish between assistant influence and merchant-site behavior, say so. If you cannot observe certain steps in the assistant flow, treat performance conclusions as conditional. The practical goal is not perfect measurement, but stable measurement that is honest about uncertainty and comparable over time.

A practical readiness checklist (privacy, disclosure, and measurement validation)

This checklist is designed to be lightweight and principles-based so it can be applied even as assistant checkout experiences change.

1) Create a data map and RACI for ownership and access

- List the data types involved: user inputs, intent signals, product selection data, and transaction details.

- For each data type, document who collects it, who processes it, where it is stored, and who can access it.

- Assign a simple RACI (Responsible, Accountable, Consulted, Informed) across privacy, legal, product, analytics, and marketing operations.
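A data map does not need special tooling. As a minimal sketch, the structure below records the questions from this step and flags entries with missing RACI roles. `DataMapEntry`, `find_raci_gaps`, and the example system and team names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

RACI_ROLES = ("Responsible", "Accountable", "Consulted", "Informed")


@dataclass
class DataMapEntry:
    """One row of the data map: a data type and who touches it."""
    data_type: str
    collected_by: str
    processed_by: str
    stored_in: str
    accessible_to: List[str] = field(default_factory=list)
    raci: Dict[str, str] = field(default_factory=dict)  # role -> team


def find_raci_gaps(entries: List[DataMapEntry]) -> List[Tuple[str, List[str]]]:
    """Return (data_type, missing_roles) for every entry with unassigned roles."""
    gaps = []
    for entry in entries:
        missing = [role for role in RACI_ROLES if role not in entry.raci]
        if missing:
            gaps.append((entry.data_type, missing))
    return gaps


data_map = [
    DataMapEntry(
        "user inputs", "assistant", "assistant", "assistant logs",
        accessible_to=["privacy"],
        raci={"Responsible": "product", "Accountable": "privacy",
              "Consulted": "legal", "Informed": "marketing operations"},
    ),
    DataMapEntry(
        "transaction details", "retailer", "retailer", "order system",
        accessible_to=["analytics"],
        raci={"Responsible": "analytics"},
    ),
]
gaps = find_raci_gaps(data_map)
```

In this example only the transaction details entry is flagged, because it has a Responsible team but no Accountable, Consulted, or Informed assignment.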

2) Consent and disclosure checklist for assistant-mediated purchase flows

- Confirm disclosures are clear at the moment the user is making a purchase decision, not only in general policies.

- Ensure the user can understand who the merchant is and what terms apply to pricing, promotions, and fulfillment.

- Validate that consent choices are respected across the stack, especially if data moves between assistant and retailer systems.

- Review retention and secondary-use assumptions for conversational inputs and intent signals, and avoid using them in ways that a user would not expect from the interaction.
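The check that consent choices are respected across the stack can be expressed as a comparison between what each system plans to do with the data and what the user agreed to. This is a minimal sketch under the assumption that purposes are tracked as simple labels. The purpose names, system names, and `blocked_uses` are invented for illustration and do not reflect any legal classification.

```python
from typing import Dict, List, Set


def blocked_uses(
    planned_uses: Dict[str, Set[str]],
    granted_purposes: Set[str],
) -> Dict[str, List[str]]:
    """Return, per system, the planned purposes the user has not agreed to."""
    blocked = {}
    for system, purposes in planned_uses.items():
        not_granted = sorted(purposes - granted_purposes)
        if not_granted:
            blocked[system] = not_granted
    return blocked


planned = {
    "assistant": {"complete_purchase"},
    "retailer_crm": {"complete_purchase", "ad_personalization"},
}
granted = {"complete_purchase"}
violations = blocked_uses(planned, granted)
```

Here the retailer CRM is flagged for `ad_personalization`, which illustrates the point about separating convenience from consent: completing the purchase does not imply agreement to secondary uses.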

3) Incident protocol for mismatches and misleading outputs

- Define triggers: price mismatches, promo eligibility errors, availability inaccuracies, and misleading recommendation framing.

- Set monitoring routines: periodic prompt-based QA for top offers and categories, plus escalation channels from customer support.

- Establish correction steps: fix source data, note downstream dependencies, and communicate changes to relevant teams.

- Log incidents and resolutions so you can spot patterns, improve governance, and reduce repeat failures.
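The factual triggers in this protocol lend themselves to an automated comparison between source data and what an assistant output stated. The sketch below assumes a QA step has already extracted the stated values from the output; that extraction is not shown. `TRIGGERS`, `detect_incidents`, and the field names are hypothetical. Misleading recommendation framing is deliberately left out because it needs human review.

```python
from datetime import datetime
from typing import Any, Dict, List

# Maps a compared field to the trigger name used in the incident log.
TRIGGERS = {
    "price": "price_mismatch",
    "promo_eligible": "promo_eligibility_error",
    "in_stock": "availability_inaccuracy",
}


def detect_incidents(
    sku: str,
    source: Dict[str, Any],
    observed: Dict[str, Any],
    checked_at: datetime,
) -> List[Dict[str, Any]]:
    """Compare values stated in an assistant output against source data.

    Only fields the output actually stated (present in `observed`) are checked.
    """
    incidents = []
    for field_name, trigger in TRIGGERS.items():
        if field_name in observed and observed[field_name] != source.get(field_name):
            incidents.append({
                "sku": sku,
                "trigger": trigger,
                "expected": source.get(field_name),
                "observed": observed[field_name],
                "checked_at": checked_at.isoformat(),
            })
    return incidents
```

Appending each returned record to a shared log gives the pattern-spotting history that the last checklist item calls for.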

Frequently asked questions

What does Gemini checkout mean for user privacy?

When checkout moves into an AI assistant, the purchase journey can involve additional data types and handoffs, such as conversational inputs, inferred intent signals, product selections, and transaction details. That can intensify privacy questions because users may experience one interface while multiple systems handle data behind the scenes. The practical implication is the need for clear disclosures, strong consent practices, and an internal map of who collects and uses which data across the assistant and retailer stack.

What is Google’s Universal Commerce Protocol (UCP) in plain terms?

UCP is discussed as a way to standardize or streamline how commerce systems communicate so assistant-mediated shopping can work across more retailers and platforms. In plain terms, it is often framed as an interoperability layer for commerce interactions. Because details can vary and evolve, focus on the planning implication: if shopping becomes more standardized across systems, you will need consistent data governance, disclosures, and measurement definitions across that ecosystem.

How should marketers handle consent and disclosure when purchases happen in an AI assistant?

Treat assistant checkout as its own conversion surface and design disclosures for the moments that matter: when a user is deciding and when they are paying. Keep disclosures plain-language and layered, clarify who the merchant is and what terms apply, and ensure consent choices are honored across connected systems. Internally, maintain a data map and ownership model so teams can answer what data is collected, where it goes, and why it is used.

How do you measure conversions when an AI assistant mediates discovery and checkout?

Start by agreeing on definitions for session, assist, and conversion that reflect an assistant-mediated journey. Then validate reporting end to end by tracing events, checking deduplication, and documenting attribution limits. The goal is measurement you can explain to stakeholders, including what is counted, where it is counted, and what gaps prevent direct comparison to traditional ecommerce reporting.
